Binary and Encoding Formats

Table of contents

- 1.0. - Base Systems

- 2.0. - Binary, Bits and Bytes

- 3.0. - Hexadecimal

- 4.0. - Endianness

- 5.0. - ASCII

- 6.0. - Unicode

- 7.0. - Base64

- 8.0. - Percent-Encoding (URL Encoding)

- 9.0. - Binary & Encoding in JavaScript

- 10.0 - Wrapping up

People are good with text, computers are good with numbers

When people do things with computers, they tend to work in text forms, whether that's programs, or whether that is some other input that they give to the computer. The idea with character encoding is that the computers actually use numbers. We take these characters, we assign them numbers. Then we figure out a way to transcribe those numbers into a list of characters. That whole process of going from text to a number is known as encoding. The reverse step is known as decoding. But before we can truly start, let's have a quick refresher on some topics.

1.0. - Base Systems

You know how we count things using numbers from 0 to 9, and then start over again after reaching 10 right? That's because we're using a place value system called base-10, or decimal system. The '10' just indicates that there are ten unique digits (0 to 9), and every time we use all those up, we need an extra place to keep counting. We're working here with powers of 10.

Did you know? The word 'decimal' comes from the Latin word 'dec' which means 'ten'.

The "base" in any counting system tells us how many different numbers or symbols we can use before we need to move to the next place. A "base" is also known as a "radix"

Let's make this simple with a table. If we want to write the number 345 in base-10, it would look like this:

| 100's place | 10's place | 1's place |

| 10^2 | 10^1 | 10^0 (= 1) |

| 3 | 4 | 5 |

In our everyday counting, each place represents a power of 10.

But that's not the only way to count. Computers, for example, use a base-2 system (binary) which only uses 0 and 1. There are other base systems like base-8 (octal) or base-16 (hexadecimal) used in specific areas in math and computing.

Let's try base-8. This only has eight unique digits (0 to 7), and every time we use those up, we add an extra place. In base-8, we use powers of 8. For example, 8^0 =1, 8^1 = 8, 8^2 = 64, and so on. If we wanted to convert our base-10 number 345 to base-8, here's how we would do it:

First, find how many 64s (or

8^2places) fit into 345.345 / 64is 5 (with some left over). So we write a '5'.Our remainder, which is

345 - (5 x 64), equals 25. Repeat the process this time with8^1or 8.25 / 8is 3 with leftovers. Write down '3' next to our '5'.With leftovers again,

25 - (3 x 8), we get 1. This is our final digit. Write down '1'.

Add those numbers together (5, 3, and 1) and we get 531 in base-8! So, counting to 345 for us in base-10 is like counting to 531 for someone who only counts up to 7 (0 to 7) in base-8. Confusing? It can be, but it's just like translating between different human languages.

| 64's place | 8's place | 1's place |

| 8^2 | 8^1 | 8^0 (= 1) |

| 5 | 3 | 1 |

After base-10, however, we run out of digits to represent the numbers, so we have to use letters, where A = 10, B = 11, C = 12, etc. So the sequence for numbers written in Hexadecimal (base-16) is 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, A, B, C, D, E, F, followed by 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 1A, 1B, 1C, 1D, 1E, 1F, 20, 21, ... etc.

In general, you can have a base system for any natural number you like. It just changes how you represent numbers and count things.

In the end, it's all about coming up with different ways to represent and work with numbers, just like how there are different languages to represent and communicate ideas.

2.0. - Binary, Bits and Bytes

Computers primarily make use of a base-2 system (binary) due to the ease of its implementation in electronic circuits in its early days. Simply put, an electronic switch can either be off = 0 or on = 1. Nowadays, the operations performed by a computer at its most fundamental level consist of turning billions of such switches on and off.

The binary system in computer systems is represented by a bit, which is short for binary digit. The bit is the smallest unit of data a computer can handle and can only represent two distinct values, either 0 or 1. However, a bit by itself holds very little practical use.

To increase the range of numbers that can be represented, bits are grouped together, thereby generating a multitude of unique combinations. The total number of combinations achievable by grouping n bits can be computed using the formula 2^n. For instance:

Two grouped bits can generate four unique combinations (

2^2 = 4):0 0= 00 1= 11 0= 21 1= 3

Three grouped bits can generate eight unique combinations (

2^3 = 8):0 0 0= 00 0 1= 10 1 0= 20 1 1= 31 0 0= 41 0 1= 51 1 0= 61 1 1= 7

And so on.

It's important to not confuse above-decimal numbers (

= X) with base-4 (quaternary) or base-8 (octal) systems. We're still using base-2 (binary) systems but added decimal numbers to make the number of combinations clear.

Commonly, bits are grouped in sets of eight to form a unit called a byte (which is short for binary term). A byte can thus represent 256 different combinations (2^8 = 256). 256 combinations are sufficient to define each Latin alphabetical character, numbers from 0 through 9, along with multiple additional special characters or signs.

Padding with leading zeros

You might see binary values represented in bytes (or more), even if making a number 8-bits-long requires adding leading zeros. Leading zeros are one or more0’s added to the left of the most significant1in a number (E.g.00000100). You usually don't see leading zeros in a decimal number: 007 doesn't tell you anymore about the value of the number 7 (it might say something else). Leading zeros aren't required on binary values, but they do help present information about the bit-length of a number. For example, you may see the number 1 printed as00000001, just to tell you we're working within the realm of a byte. Both numbers represent the same value, however, the number with seven0’s in front adds information about the bit-length of a value.

3.0. - Hexadecimal

Hexadecimal, often just referred to as Hex, is a Base-16 numerical system using the digits 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, A, B, C, D, E, F. It's commonly used in programming because it can turn hard-to-read binary data into something human-readable.

Take a .jpg file as an example. It's made entirely of binary data, or 0s and 1s. If you were to open it in a text editor, you would see a bunch of "non-printable" characters (�) that don't make a lot of sense. But, by turning this binary data into hexadecimal, each byte is represented by a group of two hexadecimal digits (0-9 and A-F). This conversion enables humans to accurately interpret even the non-printable bytes. It makes the process simpler by rephrasing countless sequences of 0s and 1s into easy-to-read hexadecimal strings like FF D8 FF 4A 10, and so forth.

A byte consists of 8 bits, and each hexadecimal digit represents 4 bits.

Another common use of Hex involves hashing algorithms, which primarily generate output in binary. What sets Hex apart and makes it a preferred choice is its ability to portray this output nearly identical to its raw binary data form. This is unlike other encoding formats, which can come in multiple variants, such as Base64 - a topic to which is introduced later.

One thing to keep in mind: In JavaScript, numbers that start with 0x are treated as hexadecimal. For example, 0xFF is read as the decimal number 255 in JavaScript.

4.0. - Endianness

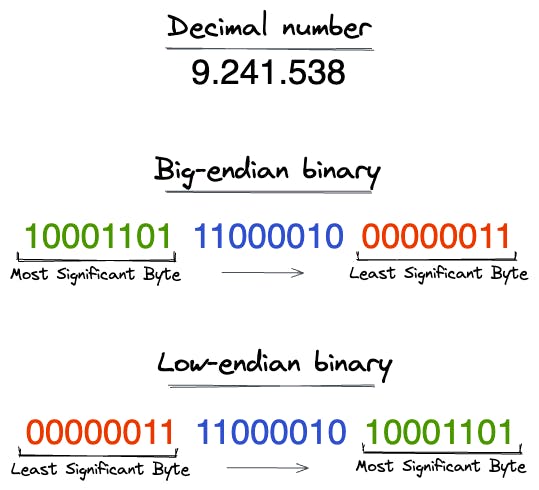

Just like different languages such as English and Arabic are read from opposite directions (left to right and right to left respectively), the sequence in which computers read and write bytes (and bits) also varies. This specific sequence in computers is referred to as Endianness.

Take the number 2.394 as an example. If you read it from left to right, the sequence will be: 2 -> 3 -> 9 -> 4. If instead, you read from right to left, it will be: 4 -> 9 -> 3 -> 2.

When talking about Endianness, we also discuss the Most Significant Byte (MSByte) and Least Significant Byte (LSByte). If you have the decimal number 3.972, the digit that can be changed to slightly increase or decrease the value is 2. Changing it to 3 will increase the overall number by only 1. Meanwhile, if you change the 3 in 3.972, the whole number goes up by a thousand. In this case, 2 is where the Least Significant Byte (LSByte) lies and 3 is where the Most Significant Byte (MSByte) is.

There are two types of Endianness: Big-endian (BE) and Little-endian (LE).

Big-endian (BE) starts storing from the biggest end. If you read multiple bytes in this format, you'll find the first byte is the biggest.

The Little-endian (LE) begins storing from the smallest end. If you're reading multiple bytes in this order, the first byte will be the smallest.

Different formats or orders in storing bytes are used by different types of systems. When the internet started growing, it became important for different systems to understand the order in which they should read data. Mainly for network protocols, Big-endian is the dominant order, which is why it's also known as the "Network order". On the other hand, most regular personal computers use the Little-endian format.

While higher-level languages often abstract many implementation details, endianness can still come into play in fields such as systems programming and data serialization, or when dealing with certain networking protocols.

Despite JavaScript and Node.JS being high-level languages in nature, they may still involve endianness concerns, particularly when leveraging APIs to handle binary data. You can delve deeper into this with this article, but we'll also scratch the surface of it later.

5.0. - ASCII

Given that a byte can represent any of the values 0 through 255 (making 256 numbers), anyone could arbitrarily make up a mapping between characters and numbers. For example, a video card manufacturer could decide that 1 represents A, so when the value 1 is sent to the video card it displays a capital 'A' on the screen. Another manufacturer of printers might decide for some obscure reason that 1 represented a lowercase 'z', meaning that complex conversions would be required to display and print the same thing.

Two concepts are important for understanding ASCII and the following Unicode:

Code points are numbers that represent the atomic parts of Unicode text. Most of them represent visible symbols but they can also have other meanings such as specifying an aspect of a symbol (the accent of a letter, the skin tone of an emoji, etc.).

Code units are numbers that encode code points, to store or transmit Unicode text. One or more code units encode a single code point. Each code unit has the same size, which depends on the encoding format that is used. The most popular format, UTF-8, has 8-bit code units. Code points are the unique values assigned to each character in a character set like ASCII or Unicode, whereas code units are the bit groupings that can represent a code point in a specific encoding form such as UTF-8, UTF-16, etc.

To avoid this happening, the American Standard Code for Information Interchange (ASCII) was invented. This is a 7-bit code, meaning there are 128 available code points (a number that uniquely identifies a given character) that can be assigned a character. This allocation method essentially embodies the concept of character encoding.

Wait, 7 bits? But why not 1 byte (8 bits)?

During the creation of ASCII in the '60s, the ASCII committee considered 7 bits to be sufficient to represent all American English characters and reduce data transmission costs. Moreover, the punched tape, a popular and inexpensive physical storage solution, had an 8-bit architecture. This allowed the use of the leftover 8th bit as a simple form of error detection (known as parity bit), adding an extra layer of protection against corrupted data during both transmission and storage.

The range of codes is divided up into two major parts; the first 32 non-printable and the 95 following printable characters. Printable characters are things like characters (upper and lower case), numbers and punctuation. Non-printable codes are for control (Backspace, Escape, ...) or the special NULL code which represents nothing at all.

For example, the string "Hello" translates to ASCII and bites as follows:

"H" -> ASCII: 72 -> bits:

01001000"e" -> ASCII: 101 -> bits:

01100101"l" -> ASCII: 108 -> bits:

01101100"l" -> ASCII: 108 -> bits:

01101100"o" -> ASCII: 111 -> bits:

01101111So, "Hello" is represented in ASCII code as72 101 108 108 111. Each character takes up exactly one byte in storage.

127 unique characters are sufficient for American English but becomes very restrictive when one wants to represent characters common in other languages. When computers and peripherals standardized on 1 byte (8 bits) in the 1970s, it became obvious that computers and software could handle text that uses 256-character sets at almost no additional cost in programming, and no additional cost for storage. This would allow ASCII to be used unchanged and provide 128 more characters. Many manufacturers devised new 8-bit character sets consisting of ASCII plus up to 128 of the unused codes, creating multiple 8-bit ASCII variations for French, Danish, Spanish,..., also referred to as extended ASCII. As there was no standard for extended ASCII, many problems arose when moving text from one computer to another. Moreover, what about the other languages needing an entirely different alphabet like Greek, Russian or Chinese? 128 characters would not cover enough, especially in Asian languages, which can have many thousands of unique characters.

Nowadays, when people mention the extended ASCII set, they're typically referring to the Latin-1 or ISO-8859-1 set.

6.0. - Unicode

A wider range of characters than ASCII is represented by Unicode. It can use up to 4 bytes. This gives us 4 x 8 bits = 32 bits or 2^32 = 4.294.967.295 unique combinations, creating much more room!

Unicode encompasses characters from various languages - such as English, Arabic, and Greek, among others - along with mathematical symbols, historical scripts, and even emojis. Although Unicode may not include every character from every language, its vast repertoire surely encompasses a majority of them.

As a superset of ASCII, Unicode shares the same meanings for the first 0–127 numbers, or 128 characters, as ASCII. For instance, the number 65 represents "Latin capital 'A'" in both standards.

Unicode is a standard that assigns code points to characters. As of September 2023, Unicode defines a total of 149,813 characters and continues to add new ones annually. Each character is represented by "U+", followed by four to six hexadecimal digits. For example, "A" is noted as U+0041 (or 41 in decimal), and the bicycle emoji "🚲" is U+1F6B2 (or 128690 in decimal). The word "Hello" is represented as U+0048U+0065 U+006C U+006C U+006F in Unicode.

Managing a wide-ranging set of characters can be challenging. This includes ensuring backward compatibility with legacy systems, effectively handling memory, space, and storage, and processing them efficiently. To address these issues, Unicode defines the three UTF (Unicode transformation format)encoding methods: UTF-8, UTF-16, and UTF-32, with each having their unique way of tackling these challenges.

6.1. - UTF-8: Size-Optimized

UTF-8 is a variable-length encoding system that uses 1, 2, 3 or 4 bytes (8, 16, 24, and 32 bits) for each character. This design makes UTF-8 fully compatible with ASCII. Like ASCII, UTF-8 only uses 1 byte for ASCII characters, ensuring that an ASCII file can be read correctly by a system anticipating UTF-8 input.

However, not all bits in multibyte characters are used to represent the character itself. Some bits are used to indicate the number of bytes used for the character's encoding:

1-byte character:

0xxxxxxx(128 characters)2-byte character:

110xxxxx 10xxxxxx(128 - 2048 characters)3-byte character:

1110xxxx 10xxxxxx 10xxxxxx(2048 - 65536 characters)4-byte character:

11110xxx 10xxxxxx 10xxxxxx 10xxxxxx(65.536 2.097.152 characters)

In 4-byte characters, only 21 bits (represented by the 'x's) are used to uniquely identify the actual character. This gives us a maximum of 2.097.152 possible characters, not 2^32 = 4.294.967.296 some might expect.

UTF-8 dominates the internet and fits Western Latin (European languages) characters perfectly, making it a natural choice for upgrading legacy applications. Except for languages like Chinese, Japanese or Korean (CJK) and special symbols or emojis, UTF-8 also tends to use the least memory. However, its variable-length nature makes it more computationally complex to parse, and string mutations can be tricky due to the variable 1-to-4-byte length of each character. We will explore this problem in more with UTF-16.

6.2. - UTF-16: Balance

UTF-16 employs a 16-bit (or 2-byte) encoding system. However, this configuration creates a ceiling of 65,536 (2^16) possible characters – a range found to be insufficient for today's standards.

In 1996, this limitation prompted the introduction of pairing two UTF-16 characters together. It did so through the reservation of two ranges with 1024 new reserved Unicode characters, ultimately creating an additional of 1,048,576 (1024 x 1024) characters. This move effectively increased the UTF-16 limit from 65,536 up to 1,112,064 ((65,536 - 2 x 1024) + (1024 x 1024)) possible characters. These pairings are also referred to as "Surrogate Pair" characters or non-BMP (Basic Multilingual Plane) characters. By expanding its range through surrogate pairing, UTF-16 established itself as a variable-length encoding system using either 2 or 4 bytes (16 or 32 bits).

The concept of surrogate pairs only applies when working with UTF-16.

The first set of reserved characters, also known as "high surrogate", ranges from U+D800 (55296) to U+DBFF (56319). The second set, known as "low surrogate", spans from U+DC00 (56320) to U+DFFF(57343). Unpaired surrogates are invalid in UTFs and are displayed as a "�" (question mark) symbol.

UTF-16 is theoretically more space-efficient for most mainstream languages but proves less so for Western languages. For instance, consider the Japanese character "家" (meaning "house" in English):

In UTF-8, it uses 3-bytes

11101000 10101010 10011110orE5 AE B6in hexIn UTF-16, it consumes only 2-bytes

10001010 10011110or5B B6in hex However, UTF-16 might not always be the most efficient option, even though the majority of languages, including Asian ones, frequently use characters like commas, periods, or Arabic numerals. These characters take up 1 byte in UTF-8, but 2 bytes in UTF-16. In fact, UTF-16 generally consumes about 50% more space than UTF-8 when encoding Western text, and only offers a 20% saving on Asian text.

Due to historical reasons, several programming languages such as JavaScript, Java, .NET and operating systems like Windows utilize UTF-16 encoding. Unicode was once limited to a 16-bit character capacity, implying a maximum of 65,536 (2^16) characters. These systems had to accommodate a multitude of languages and it was then believed to be sufficient to encompass all characters from all languages.

Furthermore, UTF-16 is plagued by the same string manipulation issue like UTF-8 due to the variable length.

For instance, executing the "Hello".length command in JavaScript reveals the quantity of UTF-16 characters employed, not the actual character count. The Chinese character "𠝹" (U+20779 or 20779), meaning "to cut", forms a surrogate pair in UTF-16. This means it utilizes two combined UTF-16 characters (0xD841 0xDF79). Splitting the character would yield an incorrect result.

console.log("𠝹".length) // 2

console.log("𠝹").slice(0,1) // � (<- '\uD841', the first surrogate pair)

In a UTF-8 aware language, the "𠝹" character would use up 4 bytes in UTF-8 (0xF0 0xA0 0x9D 0xB9 or in decimal 240 159 141 149). Slicing it anywhere would produce an invalid character.

Consequently, this mode of operation places UTF-16 in the middle ground. It's computationally less efficient than UTF-32 and theoretically consumes more space than UTF-8, this led to its quite unpopular choice today. Many contemporary programming languages such as Go and Rust now utilize UTF-8 and there is been a movement to abandon UTF-16 altogether at some point in the future.

6.3. - UTF-32: Performance

UTF-32 is a fixed-length encoding system which uses a steady 4 bytes (or 32 bits) per character, allowing for 2^32 = 4.294.967.296 possible characters. This uniformity makes UTF-32 efficient from a computational viewpoint, as there is no change in the byte size of each character, making string manipulations a breeze.

However, UTF-32's consistent use of 4 bytes can be a downside too. For every character in a Western language (read ASCII characters), you can find up to 26 leading zeros:

"!" in UTF-32:

00000000 00000000 00000000 00100001"!" in UTF-8 and ASCII:

00100001This excess makes it incredibly wasteful and results in text files that may occupy up to four times the disk space as compared to UTF-8.

Some lesser-known programming languages use UTF-32, primarily within internal applications where performance is important and memory or storage concerns are minimal. However, its usage is fairly minimal overall.

6.4. - Unicode Encodings used in web development: UTF-16 and UTF-8

In web development, two main Unicode encoding formats are used: UTF-16 and UTF-8.

JavaScript internally uses UTF-16, meaning that JavaScript strings are to be treated as UTF-16.

UTF-8, on the other hand, is used for file encodings, like HTML and JavaScript files. This is the standard these days, and most HTML files start with the specification for UTF-8 as the charset in the HTML head section. Even for HTML modules loaded in web browsers, UTF-8 remains the standard encoding.

JavaScript stored in UTF-8 but runs on UTF-16?

JavaScript engines will decode the source file (which is most often in UTF-8) and create a string with two UTF-16 code units.

7.0. - Base64

Now that we have a system to represent the huge number of characters, a new issue has surfaced, which applies not only to these characters but also to files. Many original communication protocols could only transmit human-readable English text, specifically printable ASCII characters. This limitation posed issues for non-printable-ASCII characters such as line breaks, special symbols, the transmission of files, or characters from other languages (like "ø").

To overcome this limitation, Base64 was introduced as an encoding method that converts any media type (text, images, audio files, etc.) into printable ASCII characters that can be smoothly sent across channels designed to handle these characters primarily. Base64 is a binary-to-text encoding format, unlike ASCII and UTF's, which are character encoding formats.

Binary-to-text vs Character Encoding Formats

Character encoding formats are used to represent characters in a form that computers can understand and display to users. Each character, like "Æ", is assigned a unique binary value, such as "11000011 10000110". This scheme is mainly for processing and displaying text appropriately on computers. On the other hand, binary-to-text encoding formats have a different aim: they are designed to convert any binary data (not just text) into a text format that can be safely sent over networks and systems that are designed to handle text. This includes binary files like images, audio files, etc.

7.1. - How Base64 works

The name Base64 stems from its base-64 number system, using 64 unique characters: alphanumeric symbols (a-z, A-Z, 0-9), +, and /. The = character isn't part of the Base64 character set, but it serves the purpose of padding.

Below are a few examples highlighting how characters are represented in Base64:

"A" -> Base64: 0 -> bits

000000"B" -> Base64: 1 -> bits

000001"a" -> Base64: 26 -> bits:

011010"/" -> Base64: 63 -> bits:

111111

Base64 operates with groups of four 6-bit chunks. So, three 8-bit chunks of input data (3x8 bits = 24 bits) can be represented by four 6-bit chunks of output data (4×6 = 24 bits). This means that the Base64 version of a string or file is typically roughly a third larger than its source (depends of course on various factors)

This process can be better illustrated using "Væg" (the Danish word for "wall") as an example:

"Væg"'s binary form in UTF-8 is

01010110 11000011 10100110 01100111, four 8-bit chunks.This is broken down into the following 6-bit chunks:

010101 101100 001110 100110 011001 11, resulting in five and a partial chunk.Take the first group of four chunks for encoding. Each chunk is encoded into Base64:

010101translates to "V"101100translates to "s"001110translates to "O"100110translates to "m"

The second, final, group of one full and one partial chunk. Fill up the partial chunks with zeros to make it a full 6-bit chunk. So,

011001 11becomes011001 110000. These chunks are then encoded:011001translates to "Z"110000translates to "w"

As the final group is two chunks short to form a group of 4 6-bit chunks, padding is applied using

=, resulting in the final outputVsOmZw==.

7.2. - Base64 Variants

The problem with Base64 is that it contains the characters +, /, and =, which has a reserved meaning in some filesystem names and URLs. Thus, Base64 exists in several variants that function similarly, encoding 8-bit data into 6-bit characters, but replacing those characters.

Here are a few examples:

Base64 Standard: This is the most common variant using A–Z, a–z, 0–9,

+, and/as its 64 characters, with=for padding.MIME Base64: This variant is used in emails and is similar to the standard version. However, it adds a newline character (

\n) after every 76 characters to ensure compatibility with a range of email systems.Base64url: A URL-safe variant, this version uses

-and_in place of+(space) and/(path delimiter) so as not to interfere with the URL. It's employed when the Base64 encoded data needs to be a part of both a URL or even a file name in file directories.

8.0. - Percent-Encoding (URL Encoding)

Percent-encoding, also known as URL encoding, is a mechanism that encodes characters that have a special meaning in the context of URL's.

For example, when you submit a form on a web page, the form data is often included in the URL as a query string. If any of these inputs include special characters like a slash (/) or hash (#), they would need to be percent-encoded, otherwise, they would be confused with the URL itself, instead of the data, and disrupt the URL's structure..

Special characters, also known as reserved characters, needing encoding are: :, /, ?, #, [, ], @, !, $, &, ', (, ), *, +, ,, ;, =, as well as % itself. Other characters don't need to be encoded, though they could. Depending on the context, the "space" character is translated to + or %20.

Percent-encoding vs Base64url?

Percent-encoding should not be confused with Base64url. While you could also use Base64url to encode form inputs or other textual data in a URL safe format it serves a different purpose. Base64url is used for encoding binary data that can be any data type, not just strings. Consider JSON Web Tokens (JWTs) as an illustrative example. These tokens, which may be integrated into URL’s, consist of three parts. The first two are JSON objects (aka strings) - the header and the payload. These two objects are hashed, thus forming the last part, the signature. Why is this relevant to our current discussion? Because the result of a hash operation is raw binary data (0's and 1's). They are occasionally showcased in hexadecimal form to make them somewhat human-readable. As a result, Base64url is used in such cases and even stated explicitly in the JWT specification, instead of Percent-encoding.

The default encoding mechanism used by browsers and mandated by the URL specification when encountering special characters in URL's is Percent-Encoding. For instance, if you were to open a local file named /Users/Mario/website/#.html in Chrome, it would be displayed as /Users/Mario/website/%23.html in the URL address bar.

JavaScript has built-in encodeURIComponent() and decodeURIComponent() to handle URL-encoded format.

9.0. - Binary & Encoding in JavaScript

When working with binary data in JavaScript, several methods and utilities are at your disposal.

9.1. - Helper methods: String.codePointAt(), String.codePointAt(), Number.toString() and parseInt()

Here's a starting point by understanding a few basic methods that we'll use to work with binary in JavaScript:

String.codePointAt(<index>): This method returns the Unicode decimal value of a character at a specified index in a string.console.log("ABC".codePointAt(0)); // 65 console.log("ABC".codePointAt(1)); // 66 console.log("ABC".codePointAt(2)); // 67 // Warning: Emoji and Asian characters present issues. console.log("👍".codePointAt(0)); // 128077 <- Correct Unicode value console.log("👍".codePointAt(1)); // 57209 <- Second Surrogate pairString.charCodeAt(<index>): This method retrieves the UTF-16 decimal value of a character at a specified index in a string. UnlikeString.codePointAt(), it reads the surrogate character for each pair value, instead of the whole Unicode value. It's less efficient.console.log("ABC".charCodeAt(0)); // 65 console.log("ABC".charCodeAt(1)); // 66 console.log("ABC".charCodeAt(2)); // 67 // Emoji and Asian characters are fully supported. console.log("👍".charCodeAt(0)); // 55357 <- First surrogate pair console.log("👍".charCodeAt(1)); // 57209 <- Second surrogate pairNumber.toString(<base-X>): This function facilitates conversion between different numerical bases.console.log((123).toString(32)); // "3r" in Base-32 console.log((123).toString(16)); // "7b" in Base-16 or Hexadecimal console.log((123).toString(10)); // "123" in Base-10 or Decimal console.log((123).toString(2)); // "1111011" in Base-2 or BinaryWhy the need for parenthesis?

You might wonder why we can't simply do123.toString(32)which throws an error? Well, in JavaScript, all numbers are stored as 64-bit floating point numbers. If you try123.toString(32)directly, JavaScript waits for potential decimals after123.(e.g.123.0but when it gets totoString(32), it gets confused and gives an error. Now if you use parentheses like(123).toString(32), JavaScript can identify123as a whole number first, and then apply thetoString(32)to it, and runs fine. It's like telling JavaScript very clearly, "Hey, this is the whole number, and now do thistoString(32)thing on it".

Alternatively, in JavaScript, because123 === 123. === 123.0, you can achieve the same with two dots (..), as in123..toString(32).parseInt(<string>, <base-X>): This method returns the decimal equivalent of a given string in a specified base, or base-10 by default.console.log(parseInt("3r", 32)); // 123 Base-32 to Number console.log(parseInt("7b", 16)); // 123 Base-16 or Hexadecimal to Number console.log(parseInt("123", 10)); // 123 Base-32 or Decimal, stays the same console.log(parseInt("1111011", 2)); // 123 Base-2 or Binary to NumberString.padStart(<length>, <characters>): This method adds specified characters at the beginning of a string until it reaches a specified length.console.log("1".padStart(8, "0")); // "00000001" console.log("234".padStart(4, "1")); // "1234"

Heads Up: Converting Strings into UTF-X Systems Is Not Straightforward

At this point, you could start thinking about converting a string into UTF8, UTF-16 and UTF-32 back and forth using the above methods. Don't. Converting between those is a complex task:

UTF-8 can change its byte format after the first 128 Unicode characters. Things get complicated and require handling different cases:

"A" position 41:

"A".codePointAt(0).toString(16); // '41'gives the right value"Æ" position 198:

"Æ".codePointAt(0).toString(16); // 'c6'doesn't give the right UTF-8 byte view. The correct one would bec3 86.

UTF-16 is somewhat also variable length. After Unicode 65536, characters are made up of surrogate pairs, thus we have also to handle each surrogate pair, requiring special handling. Furthermore, characters with a Unicode value below 4096 require padding to fill up the 2-byte.

"Æ" position 198:

"Æ".codePointAt(0).toString(16); // 'c6'doesn't give the correct UTF-16 byte view. The correct one would be"Æ".codePointAt(0).toString(16).padStart(4, "0"); // '00c6'to make it a full 2-byte."👍" position 128.077:

"👍".codePointAt(0).toString(16); // '1f44d'gives the wrong UTF-16 representation. It's a surrogate pair character, hence we should do"👍".charCodeAt(0).toString(16) + "👍".charCodeAt(1).toString(16); // 'd83d dc4d'

UTF-32 doesn't have complex limitations except that Unicode values below 16,777,216 always need padding:

"A" position 41:

"A".codePointAt(0).toString(16).padStart(8, "0"); // '00000041'"Æ" position 198:

"Æ".codePointAt(0).toString(16).padStart(8, "0"); // '000000c6'"👍" position 128.077:

"👍".codePointAt(0).toString(16).padStart(8, "0"); // '0001f44d'

In the examples above, we use

toString(16)to get the hexadecimal representation of the value. Don't mix it up with UTF-8 ->toString(8), UTF-16 ->toString(16)or UTF-32 ->toString(32).

See the above methods as basic methods to perform simple or direct tasks. Today, more advanced APIs are available to work with not just UTF-8, UTF-16 and UTF-32, but with Base64, Hex or binary in general.

9.2. - ArrayBuffer

ArrayBuffer is where we begin. This object represents raw binary data, in an array of bytes (8-bits). An ArrayBuffer instance is also known as a "buffer" and is simply binary-data stored in memory, and there's absolutely nothing more than this. The fixed size of the buffer is defined at creation time, and cannot be modified later, and each element of the buffer is automatically initialized to 0 to ensure that previous buffer values are not revealed.

let arrBuffer = new ArrayBuffer(16); // create an array buffer of size 16 bytes. Each array item is initialized to 0.

console.log(arrBuffer.byteLength); // 16-bytes = 16 x 8 = 128-bits

But ArrayBuffer doesn’t allow you to manipulate individual bytes. It's just a reference to the actual data.

Interacting with ArrayBuffer Through Views

Interacting with ArrayBuffer is not possible without defining a "view". A view is a class that wraps around an ArrayBuffer instance and provides the possibility to read and write the underlying data. There are two kinds of views: typed arrays and DataView.

Consider

ArrayBufferas a private variable in a class, with typed arrays andDataViewacting like public methods for interacting with this variable.

Typed Arrays vs. DataView

The fundamental difference between TypedArray and DataView lies in their particular utilization and flexibility in dealing with ArrayBuffer.

Typed arrays: A family of classes that offer an

Array-like interface for manipulating binary data. They are constrained to a single data type and a fixed length. Examples includeUint8Array,Uint16Array,Int8Array, etc. Typed arrays use the endianness of the host system.DataView: This class provides agetter/setterAPI for reading or writing data bytes to anArrayBufferat a particular offset. It allows you to access the data as elements of different types (e.g., Uint8, Int16, Float32) at any position.DataViewallows endianness to be specified.

Typed arrays and DataViews don't have prefered use cases, but rather different strengths that make them more suitable for handling the the underlying data TypedArrays performs well when handling binary data within the same system as there is no variation in endianness, thus making it suitable for useful for heavy data processing, interacting with canvas 2D/WebGL, creating audio and video data, encryption, and transferring binary data to a server or via web sockets and data channels for WebRTC.

DataView on the other hand provides a safe way to deal with binary data transferred between systems due to it not being fixed to the host systems' endianness, but allows for flexible endianness support, thus making it suitable for situations that involve files (since these are commonly shared over the internet, between systems, and bytes are stored in various offsets and sizes) like images or interaction with various protocols that define a specific endian order like SMTP, FTP and SSL.

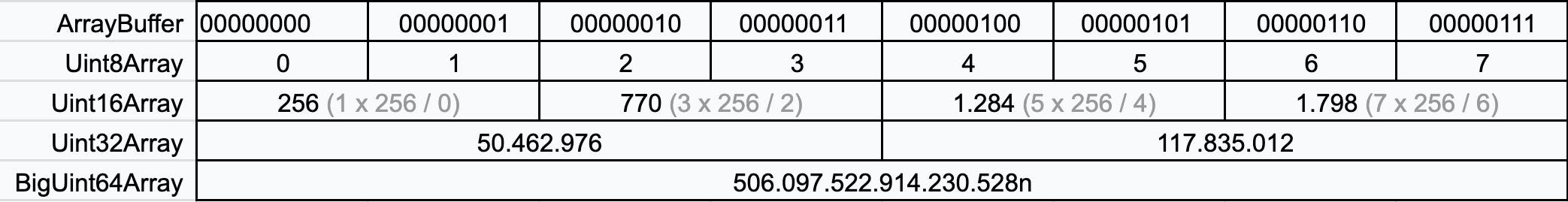

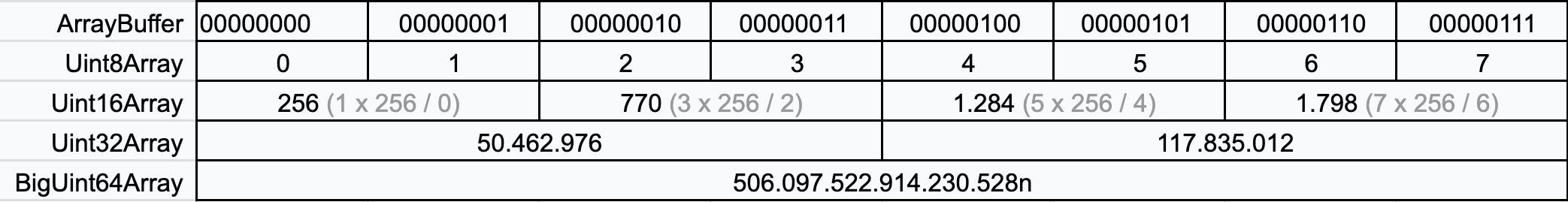

Typed Arrays: Array-Like Interaction with ArrayBuffer

Typed arrays provide an array-like interface for working with an ArrayBuffer. Similar to regular arrays, you can iterate over array items, access bytes at specific indexes, and use well-known array manipulation methods like .map(), .join(), or .includes(). Typed Arrays constrains you to view ArrayBuffer as a single data type (Uint8, Uin16, ...) and a fixed-array length.

Each typed array class interprets the bytes in the ArrayBuffer in a specific way, as described in the following table:

| Class | Description |

Uint8Array | Each array element is interpreted as 1 byte, resulting in an unsigned 8-bit integer, having a range of 0 to 255. |

Uint16Array | Each array element is interpreted as 2 bytes, resulting in an unsigned 16-bit integer, having a range of 0 to 65535. |

Uint32Array | Each array element is interpreted as 4 bytes, resulting in an unsigned 32-bit integer, having a range of 0 to 4294967295. |

Int8Array | Each array element is interpreted as 1 byte, resulting in a signed 8-bit integer, having a range of -128 to 127. |

Int16Array | Each array element is interpreted as 2 bytes, resulting in a signed 16-bit integer, having a range of -32768 to 32767. |

Int32Array | Each array element is interpreted as 4 bytes, resulting in a signed 32-bit integer, having a range of -2147483648 to 2147483647. |

Float32Array | Each array element is interpreted as 4 bytes, resulting in a 32-bit floating point number, having a range of -3.4e38 to 3.4e38. |

Float64Array | Each array element is interpreted as 8 bytes, resulting in a 64-bit floating point number, having a range of -1.7e308 to 1.7e308. |

BigInt64Array | Each array element is interpreted as 8 bytes, resulting in a signed BigInt, having a range of -9223372036854775808 to 9223372036854775807, though BigInt can represent larger numbers. |

BigUint64Array | Each array element is interpreted as 8 bytes, resulting in an unsigned BigInt, having a range of 0 to 18446744073709551615, though BigInt can represent larger numbers. |

(Uint = unsigned = positive only; Int = signed = negative and positive)

For representing even bigger ranges, there are classes available such as Float64Array or BigUint64Array.

The table below demonstrates how 16 bytes in an ArrayBuffer is interpreted when viewed using different typed array classes.

Note: The

TypedArrayview is invisible to the global scope and can only be invoked through one of its view methods. It is a collective term to refer to all these view objects.

Let's create an Int32Array typed array from an ArrayBuffer. The following code will interpret the arrBuffer as a sequence of 32-bits or 4-byte per array element. We can then read/write the 32-bit representations using array indexing:

let buffer = new ArrayBuffer(16);

let int32View = new Int32Array(buffer); // treat arrBuffer as a sequence of 32-bits, or 4-byte per array element

console.log(int32View); // Outputs: Int32Array(4) [0, 0, 0, 0]

int32View[0] = 42; // write to int32View array

console.log(int32View[0]); // Outputs: 42

You might be surprised to find that the length of int32View.length is actually 4, not 16. That's because every element in Int32Array takes up 4 bytes. So an ArrayBuffer of length 16 bytes encompasses 16 / 4 = 4 times 32-bit integers.

And remember, in the computer's memory, it's all still the same continuous line of binary data, just like the original ArrayBuffer. Typed arrays are merely interpretive views layered on top of this raw binary data.

Consider the following example where we use two different typed array views on the same underlying ArrayBuffer:

let arrBuffer = new ArrayBuffer(16);

let int16View = new Int16Array(arrBuffer); // treat the arrBuffer as a sequence of 16-bits, or 2-byte per array element

int16View[0] = 12345;

let int8View = new Int8Array(arrBuffer); // treat the arrBuffer as a sequence of 8-bits, or 1-byte per array element

console.log(int8View[0]); // 57 (the least significant byte from 12345)

console.log(int8View[1]); // 48 (the next byte from 12345)

console.log(int8View[2]); // 0

Notice that the original Int16Array and the derived Int8Array shares the same memory. If you alter the buffer via one view, you'll see the changes in the other.

If the total size of the

ArrayBufferdoesn't perfectly divide by the size of an element in our typed array (e.g.1,2or4), then we won't be able to access the "leftover" space. Thus creating a partial typed array will result in an error:let buffer = new ArrayBuffer(7); let view = new Int16Array(buffer); // Error: byte length of Int16Array should be a multiple of 2

DataView: Getter and Setter methods on the ArrayBuffer

The DataView class provides an getter/setter API that allows you to read and manipulate the data in an ArrayBuffer at a particular offset. It's important to note that although the underlying data is in the form of an ArrayBuffer, the DataView does not offer array-like features, such as looping or includes() methods to manage the underlying ArrayBuffer. However, in contrast to typed Arrays which are restricted to a single data type, DataView is not constrained to a single data type, allowing for manipulating various data types within the same buffer.

The following getter and setter methods are available on DataView:

| Getters | Setters |

getBigInt64() | setBigInt64() |

getBigUint64() | setBigUint64() |

getFloat32() | setFloat32() |

getFloat64() | setFloat64() |

getInt16() | setInt16() |

getInt32() | setInt32() |

getInt8() | setInt8() |

getUint16() | setUint16() |

getUint32() | setUint32() |

getUint8() | setUint8() |

Here's how to create a DataView and set the first byte to 3.

const arrBuffer = new ArrayBuffer(4); // [0, 0, 0, 0]

const dView = new DataView(arrBuffer);

dView.setUint8(0, 3); // write value 3 at byte offset 0 -> [3, 0, 0, 0]

console.log(dView.getUint8(0)); // 3

DataView is ideal for processing mixed data from the same data source, such as data from a .jpg or .gif file, where initial bytes may contain different types of information like file type, image size, version, etc. These information pieces occupy different lengths in the file. Looking at an imaginative media example, where the first bytes are

4 bytes: File Type Identifier,

JPG4orGIF2?2 bytes: Image height

2 bytes: Image width

4 bytes: File size

Reading such data with DataView could be done with:

// Assuming `buffer` is your file data in ArrayBuffer.

let dView = new DataView(buffer);

// Read the File Type Identifier.

let fileTypeIdentifier = '';

for (let i = 0; i < 4; i++) { // Uint32 occupies 4 bytes.

fileTypeIdentifier += String.fromCharCode(dView.getUint8(i));

}

console.log('File Type Identifier: ', fileTypeIdentifier); // " JPG"

// Read the Image Height.

let imageHeight = dView.getUint16(4, false); // Uint16 occupies 2 bytes.

console.log('Image Height: ', imageHeight); // 600

// Read the Image Width.

let imageWidth = dView.getUint16(6, false);

console.log('Image Width: ', imageWidth); // 800

// Read the File Size.

let fileSize = dView.getUint32(8, false); // Uint32 occupies 4 bytes.

console.log('File Size: ', fileSize); // 50.000

Why can't we use

getUint32for the File Type Identifier?

ThegetUint32method retrieves four bytes and interprets them as a single 32-bit unsigned integer number. However, the file identifier consists of four separate ASCII characters. Therefore, to properly collect these characters as individual entities, we need to retrieve each one with thegetUint8method which retrieves one byte at a time and converts it into an ASCII character. DoingdView.getUint32(0, false);would return the decimal541741127number which doesn't give us any information about the File Type Identifier.

Endianness in DataView

Every getter method in DataView includes an endianness parameter that you can specify as the second argument (view.getXXX(<offset> [, littleEndian])). Likewise, setter methods let you set the endianness as a third argument (view.setXXX(<offset>, <value> [, littleEndian])). When you set the endianness argument to true, it denotes little-endian order. If you set it to false or leave it undefined, it signifies big-endian order.

Here's a brief example illustrating this:

// Creation of an ArrayBuffer with a byte size

var buffer = new ArrayBuffer(16);

var dView = new DataView(buffer);

// Setting the bytes using DataView's setInt32 method

dView.setInt32(0, 123456789, false); // Big-endian write

dView.setInt32(4, 123456789, true); // Little-endian write

// Reading the bytes back using DataView's getInt32 method

console.log(dView.getInt32(0, false)); // Big-endian read: 123456789

console.log(dView.getInt32(4, true)); // Little-endian read: 123456789

// Attempting to read the bytes with incorrect endianness

console.log(dView.getInt32(0, true)); // Little-endian read returns: 365779719

console.log(dView.getInt32(4, false)); // Big-endian read returns: 365779719

Why does incorrect endianness return a value of

365779719instead of123456789reversed?

When you write123456789into DataView at position 0, it is stored in big-endian format (represented in Hexadecimal as07 5B CD 15). Attempting to read it back as little-endian withgetInt32(0, true)interprets the bytes in reversed order (Hex:15 CD 5B 07), which results in a different number-365779719. This is because endianness changes the byte order, not the decimal digits. Therefore reversing the byte order doesn't imply reversing decimal digits.

9.3. - Buffer

Prior to the introduction of TypedArray in ECMAScript 2015 (ES6), the JavaScript language had no mechanism for reading or manipulating streams of binary data. The Buffer class was introduced as part of the Node.js API to make it possible to interact with binary data in the context of things like TCP streams and file system operations.

It has since been re-implemented as a subclass of Uint8Array due to the removal of a functionality in the underlying V8 engine of Node.js, that would have broken Buffer.

Even though it now extends Uint8Array, Buffer remains widely used in Node.js due to its extensive API, including built-in base64 and hex encoding/decoding (and others), byte-order manipulation, and encoding-aware substring searching. To give you an idea, Buffer offers approximately 30 more methods than TypedArray.

Below is an example of how to use it:

let buffer = Buffer.from('H€llö, wørld!', 'utf-8'); // "ö", "ø" use 2-bytes, "€" uses 3-bytes

console.log(buffer.toString()); // "H€llö, wørld!"

// Conversion into other encoding formats

console.log(buffer.toString("base64")) // "SOKCrGxsw7YsIHfDuHJsZCE="

console.log(buffer.toString("hex")) // "48e282ac6c6cc3b62c2077c3b8726c6421"

console.log(buffer.toString("utf16le")) // "겂汬뛃썷犸摬"

The final result "겂汬뛃썷犸摬" makes sense because the buffer is being converted into a different encoding (utf16le -> utf-16) than it was originally encoded in (utf-8), causing a mismatch leading to incorrect character interpretation.

9.4. - TextEncoder

The TextEncoder.encode() object takes a string and converts it into its UTF-8 binary representation and puts it into an Uint8Array. This byte sequence can then be stored, sent, or processed more efficiently because it's in raw binary format, the native language of computers.

To illustrate its use, here's an example where TextEncoder is used to encode the '€' character into its corresponding UTF-8 byte representation in a byte array:

let encoder = new TextEncoder();

let euroArray = encoder.encode('€');

console.log(euroArray); // Uint8Array(3) [226, 130, 172]

In this code, the TextEncoder object is first assigned to encoder. It is then used to encode the character '€' and store the result in euroArray. When logging euroArray, you will see the UTF-8 byte representation of '€'. The result is a Uint8Array of three elements: [226, 130, 172].

9.5. - Base64 Encoding

The process of encoding and decoding data to Base64 differs in Node.JS and other environments.

In a Node.JS environment, you can simply use Buffer to encode/decode data to Base64.

However, in other environments, such as a browser, JavaScript has two native methods that handle the process:

btoa- Encodes an ASCII string (consisting of the extended set, Latin-1, 256 characters) into a Base64 string. This term is often misleading as it represents "binary to ASCII," even though it accepts ASCII data and not binary.atob- Decodes a Base64 string into an ASCII string. Similarly, it is also a misleading term that stands for "ASCII to binary."

Important: These methods only support ASCII characters. They do not support emojis, Asian characters, or anything outside the 256 extended ASCII character set. This limitation exists because binary data types were not available at the time the methods were added to JavaScript.

Here is an illustration of how the btoa method works:

console.log(btoa("Hello world!")); // "SGVsbG8gd29ybGQh"

console.log(btoa("€")); // Error: The string to be encoded contains characters outside of the Latin1 range.

While

btoaandatobare also accessible in Node.JS, they are labeled as deprecated to promote the usage ofBuffer. When developing frontend in a Node.JS environment, such as React, your IDE might produce a "deprecated warning". You can eliminate this warning by calling the methods on the global window objectwindow.btoaandwindow.atob.

Encoding non-ASCII characters using btoa

If you need to encode a string that contains characters beyond the ASCII range, you can first convert the string into ASCII and then parse it with btoa. This is a widely recognized workaround from MDN.

Here's how it's done:

const arrBuffer = new TextEncoder().encode("€"); // Uint8Array [226, 130, 172]

const asciiStr = String.fromCodePoint(...arrBuffer) // "â\x82¬"

console.log(btoa(asciiStr)); // "4oKs"

This code works in the following way:

new TextEncoder().encode("€"): Converts "€" into its corresponding UTF-8 binary representationUint8Array [226, 130, 172], which is11100010 10000010 10101100in binary.String.fromCodePoint(...arrBuffer): Generates an ASCII string that corresponds to each value inarrBuffer. WhileString.fromCodePointalso handles values beyond the ASCII 256 range, in this situation it converts each byte (byte = max size of 256) separately, ensuring ASCII characters only. This means thatString.fromCodePoint(226, 130, 172)returns"â\x82¬". The UTF-8 form of "€" and the ASCII combination of "â", "\x82", and "¬" share the identical binary format, emphasizing the aim of Base64 - encoding binary into text. With our UTF-8 to ASCII, we focus on the binary representation and not the text.btoasimply hides the underlying binary conversion process by accepting text directly and encoding it into Base64:The UTF-8 binary form of "€":

11100010 10000010 10101100The ASCII binary form of "â\x82¬":

11100010 10000010 10101100:Unicode 226 -> Binary conversion:

11100010-> ASCII: "â"Unicode 130 -> Binary conversion:

10000010-> ASCII control character: "\x82"Unicode 172 -> Binary conversion:

10101100-> ASCII: "¬"

btoathen encodes this ASCII string, which results in the Base64 encoding of "€".

Streaming

When working with large data like big images or files, it's recommended to work with chunks of data at a time making this process more memory efficient. This is particularly useful when working with large data sets that could potentially exceed available memory or be inefficient in processing all at once.

In Base64 encoding with streams, the input data is read in small chunks, converted to Base64 format, and gradually written into the output stream. This reduces memory usage as the entire data doesn't need to be loaded into memory all at once, especially beneficial for large data sets. It also allows the encoded data to be fed into the next part of the system or sent over the network as soon as a chunk is processed, without waiting for the whole data to be converted.

The btoa() function by itself doesn't directly support streaming, as it's a synchronous function designed for encoding data to Base64 in the browser environment.

In Node.js, you would create a readable stream from the source using const stream = fs.createReadStream('file.txt');. As you read from the stream in chunks, you convert the buffer data into Base64 for each string iteration using 'buffer.toString('base64')', within a 'data' event handler, like:

stream.on('data', (chunk) => {

let base64data = chunk.toString('base64');

// asynchronously write chunk to HTTP response, can be called multiple times

res.write(base64data);

});

stream.on('end', () => {

// end HTTP response when the stream ends

res.end();

});

9.6. - Blob

A binary large object (Short: "Blob") is a group of variable amounts of binary data, stored as a single entity.

In JavaScript, the Blob object is an immutable Web API commonly used for representing files. Blob was initially implemented in browsers (unlike ArrayBuffer which is part of JavaScript itself), but it is now supported in NodeJS.

It isn't common to directly create Blob instances. More often, you'll receive instances of Blob from an external source (like an <input type="file"> element in the browser) or library. That said, it is possible to create a Blob from one or more string or binary "blob parts".

const blob = new Blob(["<html>Hello</html>"], {

type: "text/html",

});

blob.type; // => text/html

blob.size; // => 19

These parts can be string, ArrayBuffer, TypedArray, DataView, or other Blob instances. The blob parts are concatenated together in the order they are provided. The contents of a Blob can be asynchronously read in various formats.

await blob.text(); // => <html><body>hello</body></html>

await blob.arrayBuffer(); // => ArrayBuffer (copies contents)

await blob.stream(); // => ReadableStream

10.0 - Wrapping up

Here's a summarization of some key takeaways:

People are good with text, computers are good with numbers. Computers use numbers to represent characters in a process called character encoding.

Base systems like base-10, base-2 (binary), base-8 (octal), and base-16 (hexadecimal) are used to count and represent numbers in different ways

ASCII is a character encoding standard that assigns numbers from 0 to 127 to represent characters, primarily for American English. The extended, Latin-1, version represents 0 to 255 characters.

Unicode is a character encoding standard that can represent a wider range of characters from different languages and scripts, encompassing over 149,000 characters

UTF-8, UTF-16, and UTF-32 are character encoding formats that represent Unicode characters using different binary patterns

Base64 is a binary-to-text encoding format that converts binary data into ASCII characters for safe transmission and storage

Percent-encoding, also known as URL encoding, is a mechanism for encoding characters with special meanings in URLs to ensure proper interpretation by systems

Typed arrays are used to work with binary data in JavaScript, providing efficient and flexible ways to read and manipulate raw binary data

The

Bufferclass in Node.js provides a large API for working with binary data, including Base64 encoding and decoding.The TextEncoder object in JavaScript can be used to convert strings into their UTF-8 binary representation.

Use Streaming for large data.

Here are some additional resources if you're interested in diving deeper into these topics: